System accuracy survey conducted in January 2025

January 4, 2025|M9 STUDIO INC. Research

1. Introduction

1.1 Purpose of the report

Our previous investigation of AI translation accuracy was conducted in August 2024. This report presents the results of the latest accuracy survey, conducted on January 4, 2025, and comprehensively examines how video translation software evolved, and what problems emerged, between August 2024 and January 2025, clarifying the technical and structural challenges each company faces.

Furthermore, by demonstrating the superiority of our M9 STUDIO system, we present the comprehensive quality indicators that the market requires.

1.2 Scope and target of verification

- Verification target software:

  - Heygen

  - RASK

  - Elevenlabs

  - WAVE A.I.

  - Synthesia

  - Our company's M9 STUDIO (analyzed in detail in later chapters)

- Implementation details:

  - English→Japanese: three speakers (mother, father, and the main character, their daughter)

  - Japanese→English: narration by one English speaker

  - Evaluation axes: translation accuracy (measured with the January 2025 version of Gemini) and video completeness (audio/video synchronization, speaker assignment, pronunciation quality, etc.)

Videos used for verification

All videos used for verification are released under a Creative Commons license and may be used freely, including for commercial purposes. The Japanese video was produced by the Consular Section of the U.S. Embassy's Public Affairs and Cultural Exchange Department (link). The English video was produced by the Sussex Humanities Lab (link).

License: Creative Commons Public Domain Dedication (CC0)

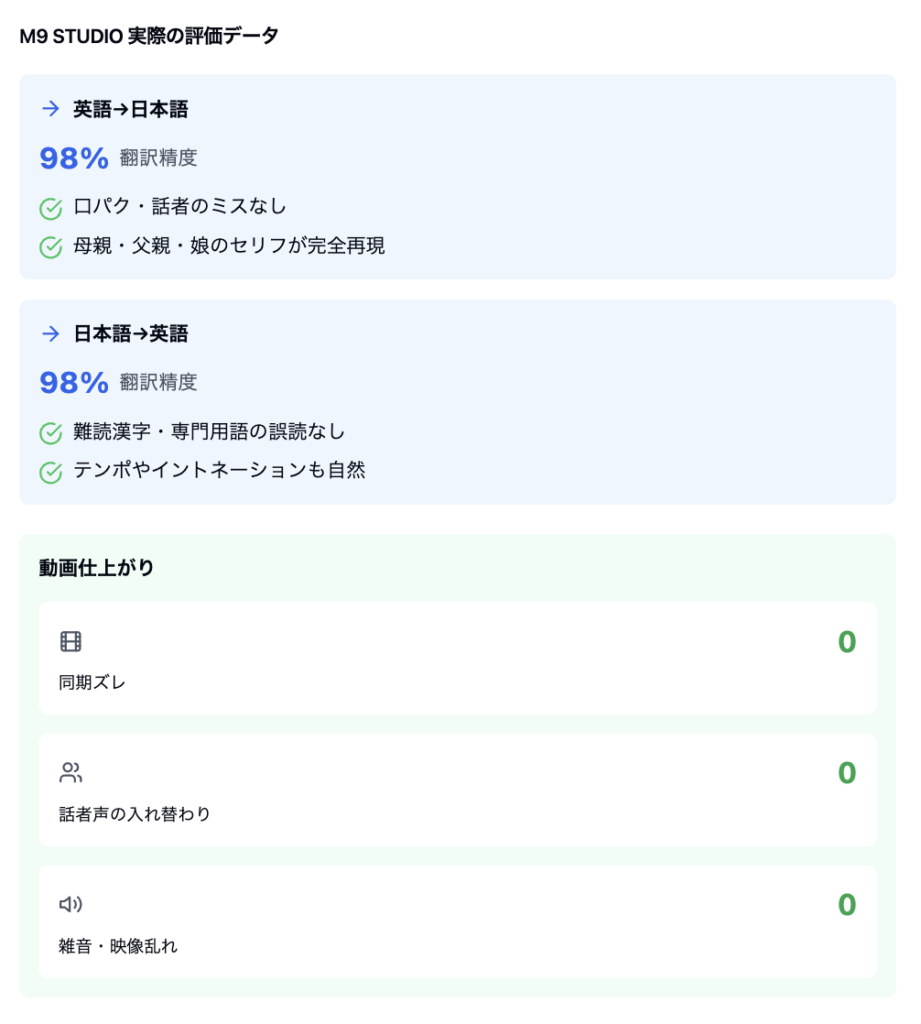

Translation results using the M9 STUDIO system

[Translation accuracy] Achieves practical-level accuracy of over 98%, with stable quality in both English→Japanese and Japanese→English translation. It is particularly strong at understanding context and producing natural phrasing, and is highly rated for business use.

[Main features]

Integrated multimodal architecture

The design integrates translation, speech synthesis, and video synchronization so that each element works in concert with the others. As a result, gains in translation accuracy do not compromise the performance of the other elements, and the output as a whole stays high quality.

Intelligent speaker recognition system

A proprietary AI algorithm accurately identifies multiple characters and preserves each character's voice quality and speaking style. For example, in a family conversation scene, dialogue is reproduced naturally without losing the individuality of the mother's, father's, and daughter's voices.

Bilingual speech generation engine

We have achieved native-level pronunciation quality in both Japanese and English. In Japanese in particular, the engine correctly reads loanwords such as "data" and "computer" as well as complex kanji, producing audio that sounds natural to listeners.

High precision video synchronization system

The system automatically checks the synchronization of mouth movements and audio frame by frame, proactively detecting and correcting misalignment and audio quality issues. By combining AI-driven automatic checks with detailed human review, we achieve the highest level of quality control.
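A frame-level synchronization check of the kind described above can be sketched as follows. This is a minimal illustration, not M9 STUDIO's implementation: it assumes that, for each line of dialogue, we already know when lip movement starts in the video and when the dubbed audio starts. The `Segment` structure, the `find_sync_errors` function, the 30 fps frame rate, and the 2-frame tolerance are all hypothetical choices.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One line of dialogue (hypothetical structure for illustration)."""
    line_id: str
    mouth_start_s: float  # time (s) at which lip movement for the line begins
    audio_start_s: float  # time (s) at which the dubbed audio for the line begins


def find_sync_errors(segments, fps=30.0, tolerance_frames=2):
    """Return (line_id, offset_frames) for every segment whose audio start is
    more than `tolerance_frames` video frames away from the lip movement.
    A positive offset means the audio is late; negative means it is early."""
    errors = []
    for seg in segments:
        offset_frames = round((seg.audio_start_s - seg.mouth_start_s) * fps)
        if abs(offset_frames) > tolerance_frames:
            errors.append((seg.line_id, offset_frames))
    return errors


if __name__ == "__main__":
    segments = [
        Segment("mother-01", 1.00, 1.03),    # about 1 frame late: within tolerance
        Segment("father-01", 4.50, 4.80),    # about 9 frames late: flagged
        Segment("daughter-01", 8.20, 8.10),  # about 3 frames early: flagged
    ]
    for line_id, offset in find_sync_errors(segments):
        print(f"{line_id}: off by {offset} frames")
```

Measuring the offset in frames rather than seconds ties the tolerance directly to what a viewer sees; the threshold would need tuning per frame rate and content.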

2. Detailed verification results of other companies' software

Here, we translated the same videos under exactly the same conditions using video translation tools that hold a large share of the global market.

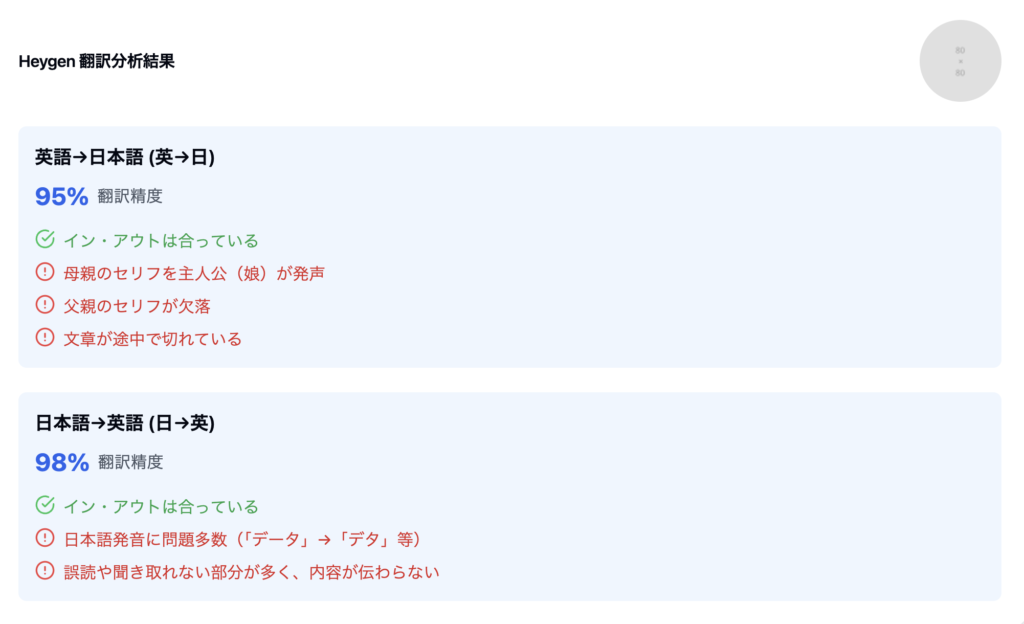

2-1. Heygen

Main issues

- English → Japanese: Speaker assignment breaks down; for example, the daughter speaks the mother's lines and the father's lines are never spoken.

- Japanese → English: Frequent kanji misreadings and broken grammar make it hard to tell what is being said.

Overall review: Although the text-level translation accuracy is high, problems with speaker management and pronunciation quality cause the video as a whole to fail badly.

2-2. RASK

2-3. Elevenlabs

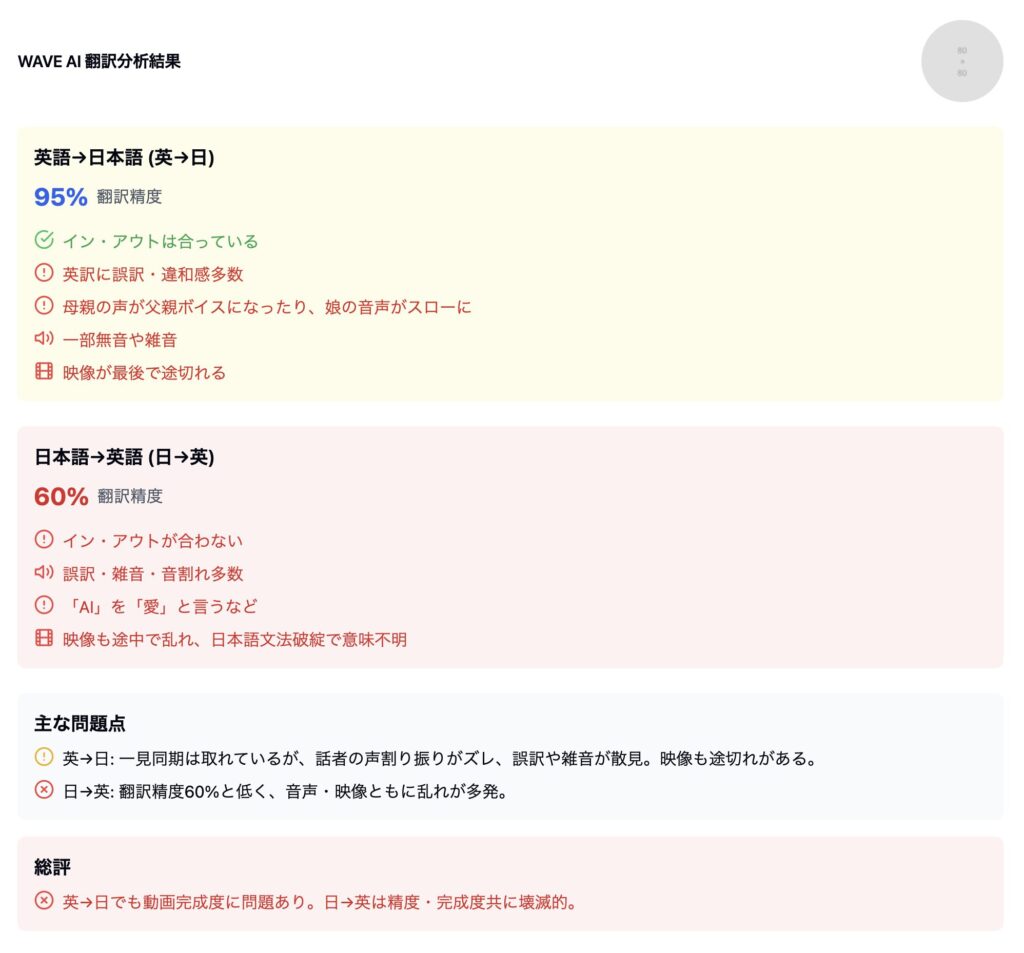

2-4. WAVE AI

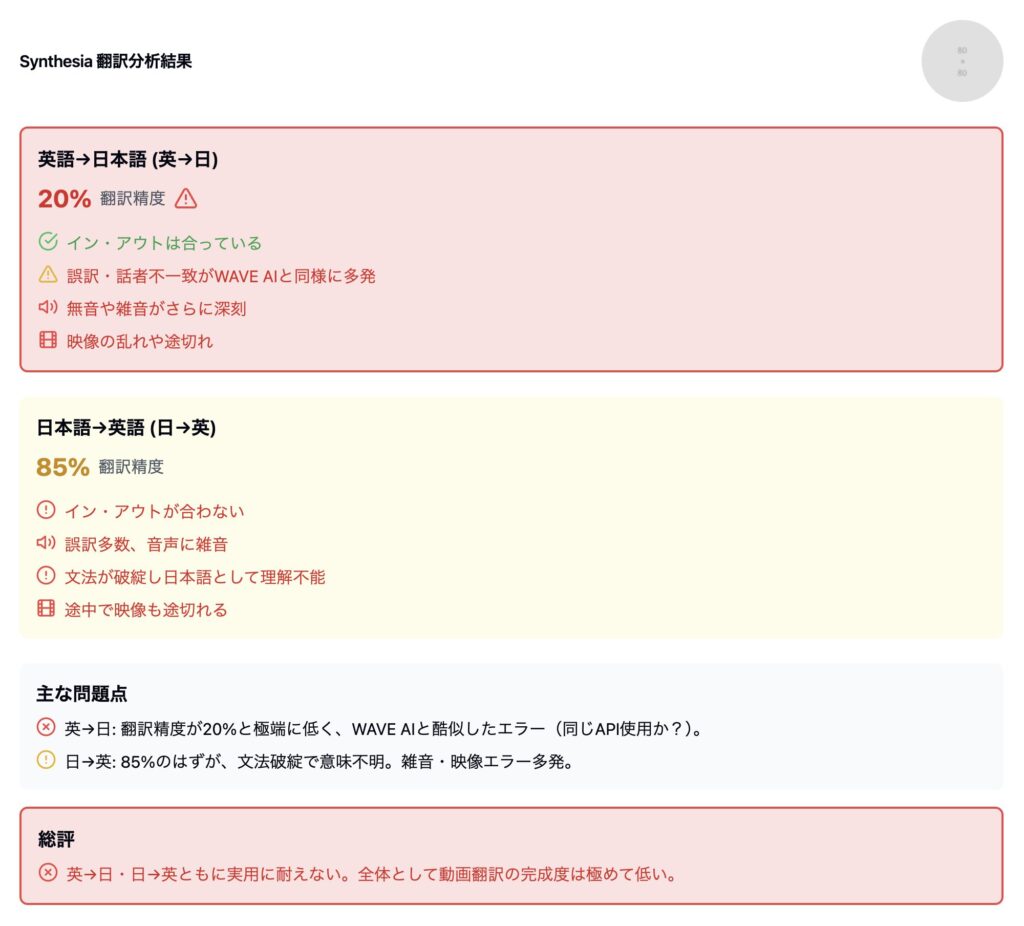

2-5. Synthesia

3. Verification results for our M9 STUDIO

The following are the results of translating the videos with our system.

As with the other companies' products, we used the latest version of Vertex to assess the accuracy and overall quality of the completed video.

Main features of the M9 system

- Consistency in multimodal design

  - From the early stages of development, the architecture was built around the trinity of "translation," "speech synthesis," and "video synchronization."

  - The entire process is managed in an integrated way, so improving translation accuracy does not break the video side.

- High-accuracy speaker identification and speaker switching

  - A proprietary algorithm analyzes the characters and automatically matches each line to the correct voice quality and timing.

  - Speech speed and intonation are optimized individually for the mother, father, and daughter.

- Native-like pronunciation synthesis in both Japanese and English

  - Special pronunciations such as "data" and "computer" are learned automatically to achieve natural, correct readings.

  - Kanji reading and grammar processing are also robust, so the output rarely breaks down.

- Automated synchronization testing against the video

  - Lip sync and audio synchronization are checked frame by frame, reducing lag, silence, and noise to almost zero.

  - High quality is guaranteed through a hybrid of AI verification and human verification.

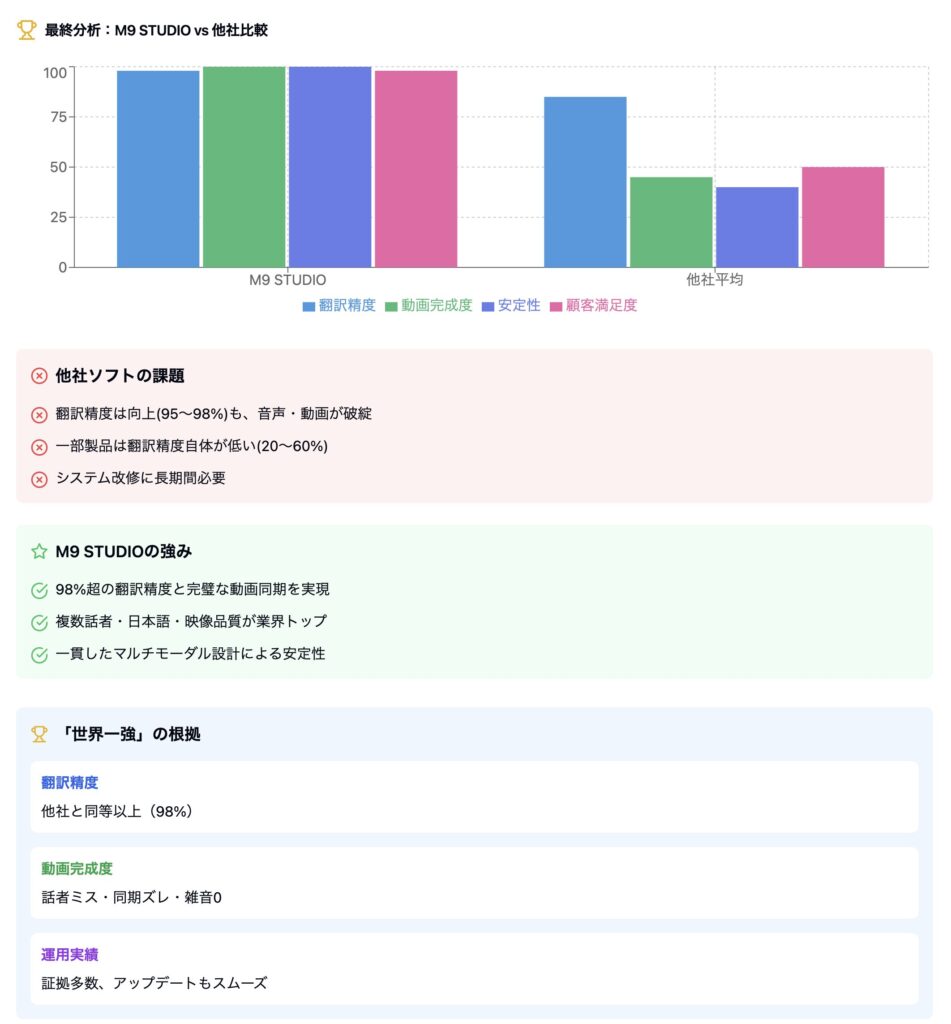

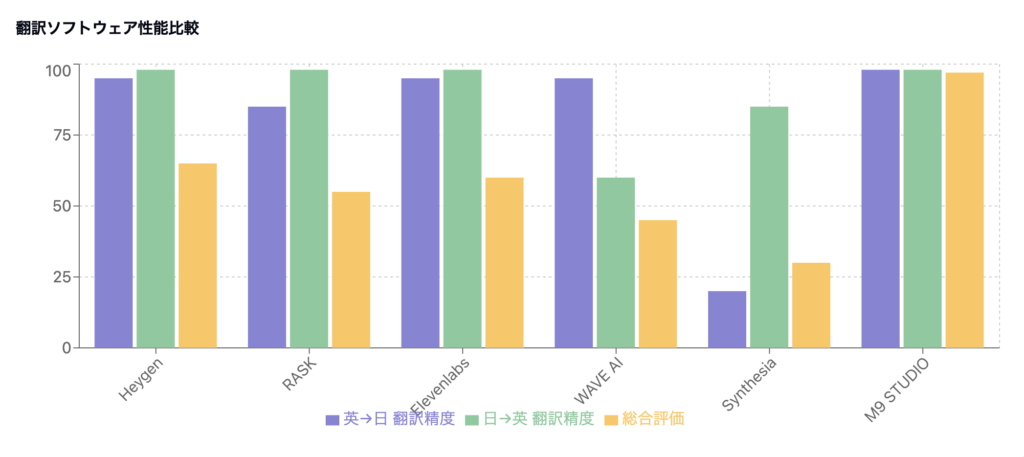

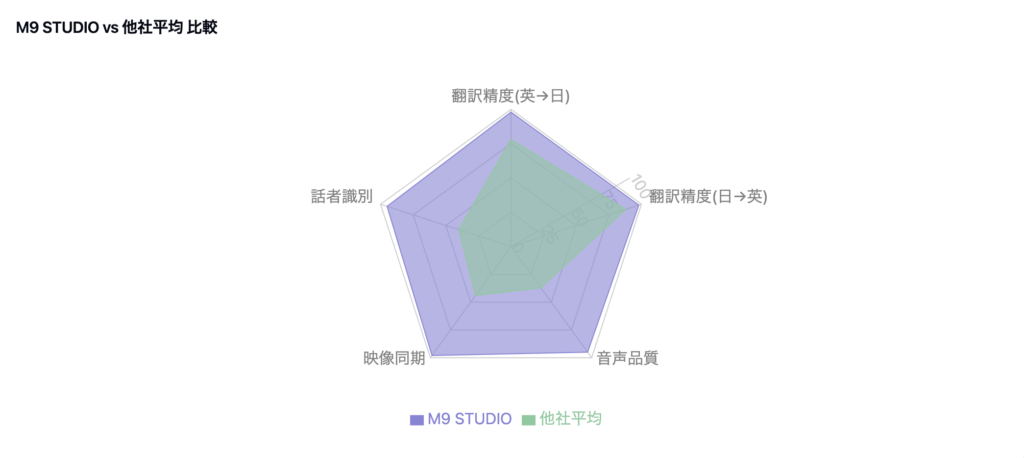

Overall comparison table

Based on the results so far, we summarize each company's system and overall evaluation.

Note: “Translation accuracy” is a composite index combining Gemini's scores with actual language compatibility.

“Video completeness” is an overall evaluation covering speaker assignment, speech synthesis quality, visual disruption, length deviation, and so on.

4. Technical changes in the AI industry from August 2024 to January 2025

4.1 Rapid increase in translation accuracy

- As of August 2024: average translation accuracy across companies was approximately 85% (90-95% for the top products)

- As of January 2025: most products reached 95-98%

Cause analysis

- Integration of large language models

  - New AI engines (such as Gemini) have been introduced, dramatically improving text-level translation accuracy.

- Emphasis on accuracy due to competitive pressure

  - Because translation accuracy tends to serve as the market's yardstick, priority was given to development that raises the "numbers" in the short term.

4.2 Decline in video quality

- Fatal errors are common, such as the main character speaking the mother's lines or the father's lines being omitted.

- Japanese output breaks down due to mispronunciations (e.g., of words such as "data").

- Video length and audio timing do not match, and noise and crackling are increasing.

Typical example: a well-known overseas translation system

- August 2024: translation accuracy of 90-95%; the video itself had few problems

- January 2025: translation accuracy rose to 98%

  - However, a fatal synchronization error occurred in which the main character spoke the mother's lines.

  - Improved translation accuracy came at the cost of a significant drop in video quality.

Trade-off background

- Language processing models have been strengthened, while improvements to the functions that control speech generation, speaker identification, and video synchronization have lagged behind.

- This is the typical trade-off of "text-level correctness" vs. "multimodal completeness."

5. Structural problems of each company's software

The following summarizes the main problems found in other companies' products as of January 2025.

5.1 Failure of speaker identification

- This is especially noticeable with three speakers, for example when the daughter speaks the mother's lines.

- In many cases, the father's lines are missing or are spoken in the main character's voice.

- Cause: inadequate speaker management algorithms and poor integration with the speech synthesis engine.

5.2 Degradation of voice quality

- Errors in Japanese pronunciation: misread words such as "data", misread kanji, etc.

- Erratic fluctuations in speech speed (suddenly going from slow to fast)

- More silence and noise, and timing lag relative to the video

- Cause: immature Japanese-specific reading logic and speed/intonation control.
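Sudden speed changes like those listed above can be caught automatically by comparing the speech rate of consecutive dubbed segments. The sketch below is a hypothetical illustration, not any vendor's actual check: it uses characters per second as a rough proxy for speaking rate (mora counts would be more precise for Japanese), and the function names and the 1.5x jump threshold are assumptions.

```python
def speech_rates(segments):
    """Characters per second for each (text, duration_s) segment.
    Durations are assumed to be positive."""
    return [len(text) / duration_s for text, duration_s in segments]


def flag_speed_jumps(segments, max_ratio=1.5):
    """Return the indices of segments whose speech rate differs from the
    previous segment's by more than a factor of `max_ratio` in either direction."""
    rates = speech_rates(segments)
    flagged = []
    for i in range(1, len(rates)):
        ratio = rates[i] / rates[i - 1]
        if ratio > max_ratio or ratio < 1 / max_ratio:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    dubbed = [
        ("おはよう", 1.0),                        # 4 chars/s
        ("今日は晴れですね", 2.0),                # 4 chars/s
        ("急いで準備して学校に行きなさい", 1.5),  # 10 chars/s: sudden speed-up
    ]
    print(flag_speed_jumps(dubbed))
```

Comparing each segment only with its predecessor targets the "slow to fast" jumps described above; a speaker who is consistently fast would not be flagged.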

5.3 Separated architecture

- Many companies appear to develop translation, speech synthesis, and video synchronization separately.

- Because the modules are coordinated only afterward, data inconsistencies arise between them.

- Each time the latest version of a large language model is adopted, the risk of inconsistency with speech synthesis and video synchronization increases.

5.4 Insufficient quality control/testing

- In the verification process, companies focused on translation accuracy (the numbers) and put off actual testing of video quality.

- Advanced speaker identification and Japanese pronunciation were released without testing on actual devices, and problems were discovered only after user feedback.

6. Detailed analysis of technical issues

6.1 Limitations of multimodal processing

- As translation accuracy has improved, translations have grown longer in words and sentences and more natural in expression, but adjusting them to the length of the video has not kept up.

- If the output text of a large language model is fed directly into speech synthesis, speaking time and lip sync diverge noticeably from the video.
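The length problem described above comes down to simple arithmetic: if a translated line takes longer to speak than the original slot in the video, it must either be sped up or rewritten more concisely. The sketch below is a hypothetical illustration of that decision. It estimates speaking time from character count at an assumed nominal rate of 8 characters per second, and the function names and the 1.25x maximum natural speed-up are illustrative assumptions, not values from this survey.

```python
def required_speed_factor(text, slot_s, chars_per_s=8.0):
    """Speed-up factor needed for `text` to fit a `slot_s`-second slot,
    estimating speaking time from a nominal rate of `chars_per_s`."""
    estimated_s = len(text) / chars_per_s
    return estimated_s / slot_s


def fit_plan(text, slot_s, chars_per_s=8.0, max_speedup=1.25):
    """Keep the line, speed it up slightly, or flag it for a shorter rewrite."""
    factor = required_speed_factor(text, slot_s, chars_per_s)
    if factor <= 1.0:
        return ("keep", 1.0)
    if factor <= max_speedup:
        return ("speed_up", round(factor, 2))
    return ("shorten_text", round(factor, 2))


if __name__ == "__main__":
    slot = 2.0  # length of the original line in the video, in seconds
    for n_chars in (16, 20, 32):
        print(n_chars, fit_plan("あ" * n_chars, slot))
```

Capping the speed-up reflects the trade-off in this section: beyond a modest factor, sped-up speech itself sounds unnatural, so the text has to change instead.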

6.2 Defects in speaker assignment

- When there are three or more speakers, existing algorithms cannot accurately track which person speaks which line.

- Because image recognition, voice recognition, and translation do not work together, errors occur, such as the daughter's voice playing while the mother is on screen.
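The two failure types described in this section, lines spoken in the wrong voice and lines dropped entirely, can be checked mechanically once the expected speaker of each line is known. The sketch below is a hypothetical QA check, not a description of any product's internals: it assumes a script mapping line IDs to speakers, a speaker-to-voice assignment, and a record of which voice was actually used for each line in the dubbed output.

```python
def check_speaker_assignment(script, dub, voice_for):
    """Compare the expected speaker of each line with the voice actually used.

    script:    {line_id: speaker}  expected speaker for each source line
    dub:       {line_id: voice}    voice used for each line in the dubbed output
    voice_for: {speaker: voice}    voice assigned to each speaker
    Returns (misassigned, missing), two lists of line IDs.
    """
    misassigned, missing = [], []
    for line_id, speaker in script.items():
        if line_id not in dub:
            missing.append(line_id)          # line was never spoken
        elif dub[line_id] != voice_for[speaker]:
            misassigned.append(line_id)      # line spoken in the wrong voice
    return misassigned, missing


if __name__ == "__main__":
    script = {"line-1": "mother", "line-2": "father", "line-3": "daughter"}
    voice_for = {"mother": "voice_A", "father": "voice_B", "daughter": "voice_C"}
    # The daughter's voice reads the mother's line, and the father's line is dropped.
    dub = {"line-1": "voice_C", "line-3": "voice_C"}
    print(check_speaker_assignment(script, dub, voice_for))
```

A check like this only catches errors against a known script; tracking who is speaking in the first place still requires linking image recognition, voice recognition, and translation.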

6.3 Language processing unique to Japanese

- Because many vendors are English-speaking companies, support for Japanese tends to be deprioritized.

- Mispronunciations of words such as "data" may stem from the system confusing similar words such as "data" and "delta."

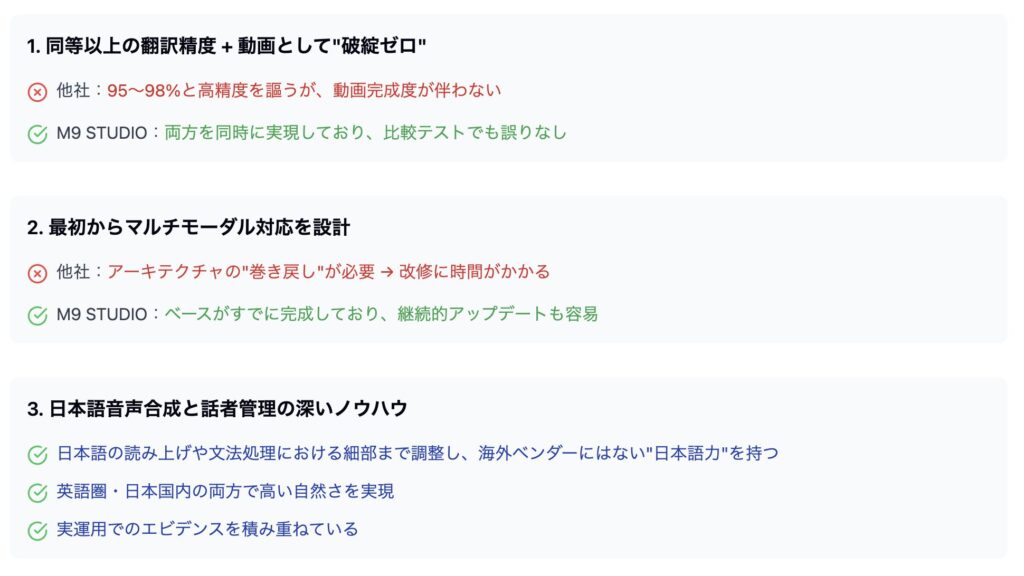

7. Why is M9 STUDIO’s technology so powerful?

We conduct research and development every day and update our products independently. As a result, we can improve translation accuracy without being affected by external APIs or AI engines.

Many other companies' services are forced to "roll back" or undertake large-scale rework whenever the specifications of major AI models or APIs change. M9 STUDIO, by contrast, manages its core logic and algorithms in-house, so it can tune continuously in short cycles. This yields benefits such as:

- Necessary new features and accuracy improvements are reflected immediately

- Stable quality and video synchronization are maintained

- User feedback is incorporated quickly

One of the biggest reasons M9 STUDIO is regarded as one of the best is that it can constantly incorporate the latest AI technology and evolve in its own way without sacrificing the completeness of the video.

Memo|Are there fewer failures in Spanish and French?

For English → Spanish (or English → French and English → Chinese), however, we assume that video failures like those seen in this survey are less pronounced than in Japanese.

This is because English-speaking development companies tend to focus development and tuning on English⇔Spanish, English⇔French, and English⇔Chinese, while support for Japanese tends to be postponed. As a result, unnatural translation and speech synthesis are more likely to occur in Japanese.

Another reason translation between Japanese and other languages fails is that English and many European languages share similar word order and sentence structure, which makes them easy to translate between even for large language models. Japanese grammar is very different: the subject is often omitted, and nuance changes greatly depending on the particle. A major factor is that current models cannot fully accommodate these differences.

8. Advantages of our system

8.1 Integrated architectural design

- Translation → audio → video is handled by a single, consistent quality control system.

- Consistency is checked in real time, and synchronization is managed frame by frame.

- Since August 2024, we have continued to strengthen our speech synthesis and speaker management modules.

8.2 Precise control of speaker identification technology

- Scripts and voices are learned in advance using a proprietary algorithm.

- Because an individually optimized speech synthesis engine is assigned to each speaker, mistakes such as jumbled lines almost never occur.

8.3 Deep support for Japanese

- Handles Japanese-specific pronunciation (long vowels, consonants, special readings, etc.).

- Our own pronunciation and accent dictionaries ensure that words such as "data" and "computer" are read correctly.

- Translation accuracy stays above 98% for both English and Japanese, and the quality of the synthesized speech is also high.

8.4 Evidence for practical application

- Accuracy verification with the latest version of Vertex as of 2025 also returned evaluations of "as natural as native speech" and "zero misassignment across multiple speakers."

- Because the product is already highly complete, there is no need for "backward" rework, and the risk of failure with each update is low.

Memo|Issues in overseas software introduction cases

In recent years, some Japanese companies and services have adopted foreign video translation software that holds a large market share.

For example, some Japanese services and companies use a well-known translation API. However, as the results of this verification show, such tools have fatal problems when creating translated videos involving Japanese (mixed-up speaker assignments, broken pronunciation and grammar, etc.), to the point that a business for the Japanese market was not viable. Adopting them is not without risk.

AI development by Japanese companies often relies on large language models and speech technologies from overseas. However, unless the pronunciation and grammar processing unique to Japanese is properly handled, quality failures like this one are inevitable.

In this respect, we believe that our technology, which achieves high accuracy and high quality in Japanese, offers a clear advantage.

9. Future outlook and impact on the market

As this data shows, catching up would require other companies in the industry to spend a huge amount of time and money on development. Below, we explain why.

9.1 Possibility of comeback by other companies

- Fundamental redesign (architectural restructuring) is required.

- End-to-end improvements spanning "translation," "speech synthesis," "video synchronization," and "speaker management" require significant resources and time.

- Piecemeal, hybrid modifications are unlikely to solve the problem.

9.2 Increasing quality requirements

- While market demand for video translation is growing, users are beginning to realize that "high translation accuracy alone is not enough."

- Demand is growing for overall quality, including natural audio, video synchronization, and correct speaker matching.

9.3 Value brought by M9 STUDIO

- High-quality final products improve end-user satisfaction and brand trust (currently, no video translation tool exceeds our level).

- Because there is no burden of retrofitting fixes, operational and support costs are reduced.

- As large-scale AI models continue to evolve, flexible and stable updates will be possible.

[Important] Regarding the safety of AI video translation tools

One reason Japanese companies have been slow to adopt AI is that many are concerned their own data will be used to train AI models. Concerns remain that video translation software made overseas may use customer data in this way.

For example, the major company Panasonic has adopted Anthropic's products (announcing a strategic agreement at CES on January 8, 2025). To avoid such risks, the key is deciding what kind of AI to incorporate.

*Anthropic was founded out of concerns about safety at OpenAI (materials)

With M9's AI video translation tool, however, your company's information and data are never diverted to training our models.

We have treated this issue as a priority since we first introduced AI, and we handle all data, including logs and translation results, under strict policies designed for enterprise use.

Data non-training settings

- Separation of training data

The videos and subtitle information you upload are used only for translation processing and are never used to retrain our AI models or improve their performance.

- No opt-in

We offer no "opt-in" option for collecting additional data for training, so there is no possibility of your data being used unintentionally.

Log retention policy

- Access restrictions and encryption

In line with enterprise-level security standards, internal system communications and stored data are always encrypted, and access privileges are restricted.

- Minimal and strict management

Even when logs must be kept for purposes such as failure analysis, they are retained only to the minimum extent and for a limited period, and are securely deleted once no longer needed.

For your peace of mind

- Administrator privileges and audit logs

You can exercise administrator privileges and review audit logs of usage. In the unlikely event of suspicious access, we can respond quickly.

- Dedicated support system

Both before and after implementation, our dedicated support staff will answer your company's requests and questions in detail and can explain our security measures at any time.

At M9, we always design and operate our services with the strict protection of our customers' important information as our top priority. Please use our AI video translation tool with confidence.

Conclusion